Taehyun Cho

I am a final-year Ph.D. candidate in the Cognitive Machine Learning Laboratory, Department of Electrical and Computer Engineering, Seoul National University, advised by Jungwoo Lee. I received my B.S. in Mathematics from Korea University.

E-mail: talium@cml.snu.ac.kr

GitHub / Google Scholar / LinkedIn / CV

Research Interest

My academic research focuses on sequential decision-making under uncertainty, particularly in settings that involve human feedback. I have extensively studied distributional reinforcement learning (distRL), reinforcement learning from human feedback (RLHF), and regret analysis, with the aim of bridging theory and practice. Drawing inspiration from how humans make decisions, I aim to develop mathematical models of human-in-the-loop systems and methods to optimize them, uncovering both theoretical insights and practical algorithms for robust decision-making. Currently, I’m interested in reasoning LLM agents and regret-based decision theory. I’m actively looking for postdoctoral opportunities in the theoretical foundations of reinforcement learning or in reasoning LLM research.

Preprints

An Axiomatization of Process Score Model: Your Process-level Feedback is Not a Reward
Taehyun Cho, Suhwan Kim, Seungyub Han, Seokhun Ju, Dohyeong Kim, Kyungjae Lee, Jungwoo Lee
Work in progress

Off-policy Direct Preference Optimization with Monotonic Improvement Guarantee
Seungyub Han*, Taehyun Cho*, Seokhun Ju, Dohyeong Kim, Kyungjae Lee, Jungwoo Lee
Work in progress

Policy Optimization with Process Regret Model
Suhwan Kim*, Taehyun Cho*, Seungyub Han, Seokhun Ju, Dohyeong Kim, Kyungjae Lee, Youngsoo Jang, Geonhyeong Kim, Yujin Kim, Moontae Lee, Jungwoo Lee
Work in progress

International Conference

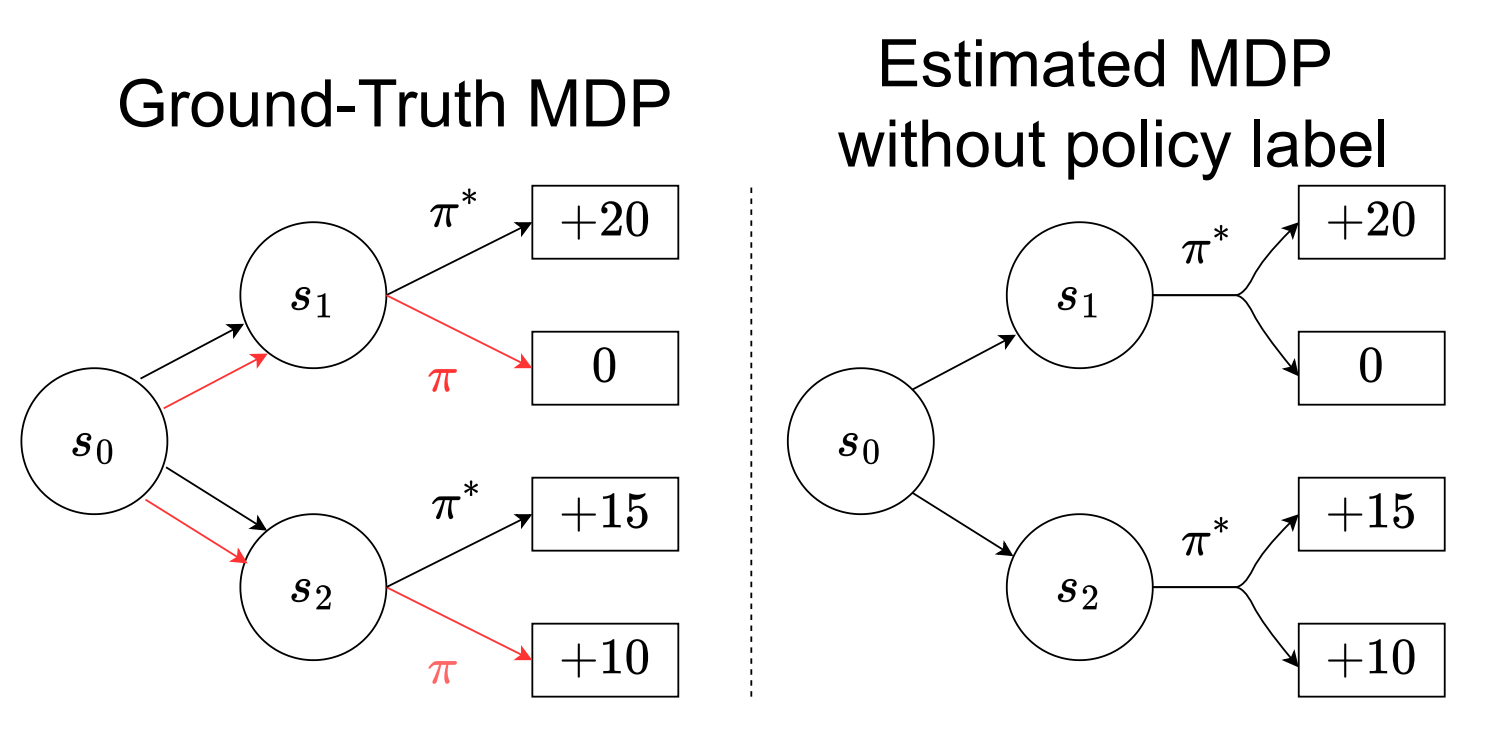

Policy-labeled Preference Learning: Is Preference Enough for RLHF?
Taehyun Cho*, Seokhun Ju*, Seungyub Han, Dohyeong Kim, Kyungjae Lee, Jungwoo Lee
ICML 2025 (Spotlight, top 2.6%)
paper / arxiv

Bellman Unbiasedness: Toward Provably Efficient Distributional Reinforcement Learning with General Value Function Approximation
Taehyun Cho, Seungyub Han, Kyungjae Lee, Seokhun Ju, Dohyeong Kim, Jungwoo Lee
ICML 2025
paper / arxiv

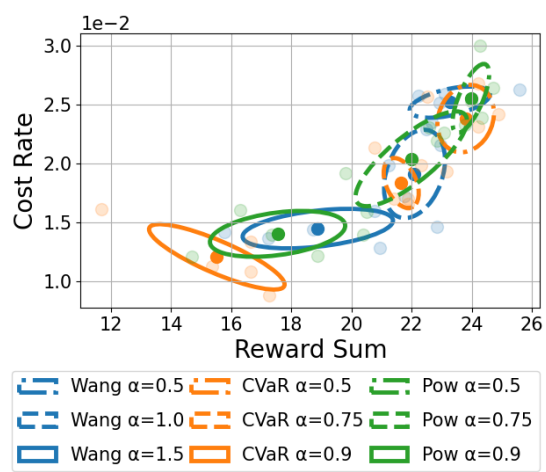

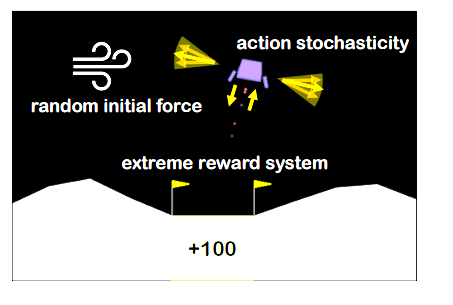

Spectral-Risk Safe Reinforcement Learning with Convergence Guarantees
Dohyeong Kim, Taehyun Cho, Seungyub Han, Hojun Chung, Kyungjae Lee, Songhwai Oh
NeurIPS 2024
paper / arxiv

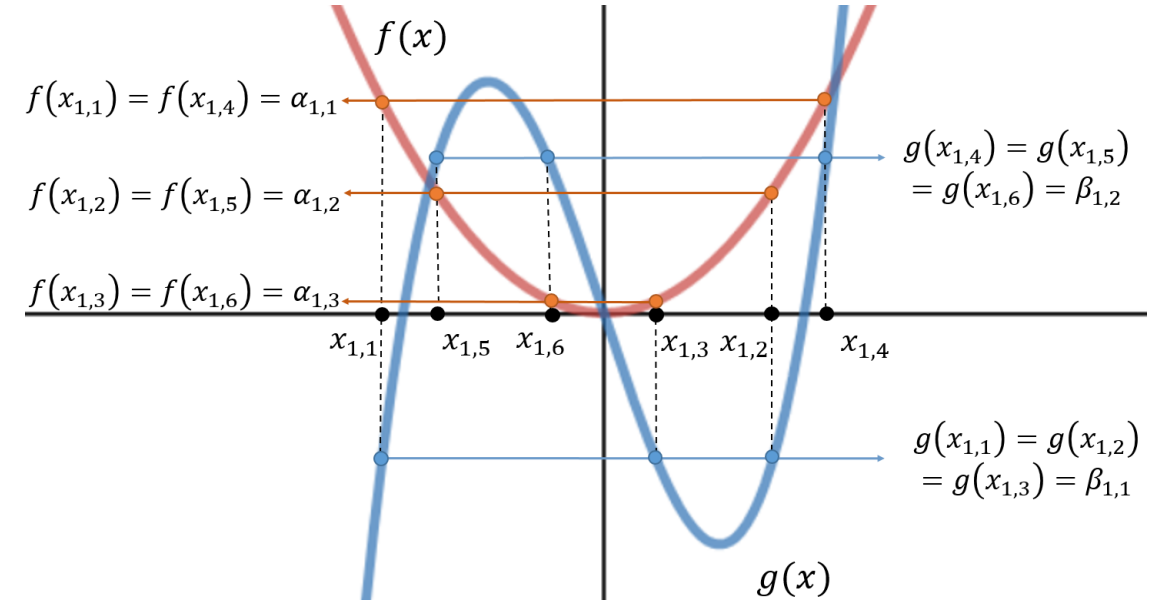

Pitfall of Optimism: Distributional Reinforcement Learning by Randomizing Risk Criterion
Taehyun Cho, Seungyub Han, Heesoo Lee, Kyungjae Lee, Jungwoo Lee
NeurIPS 2023
paper / arxiv

SPQR: Controlling Q-ensemble Independence with Spiked Random Model for Reinforcement Learning
Dohyeok Lee, Seungyub Han, Taehyun Cho, Jungwoo Lee
NeurIPS 2023
paper / arxiv / code

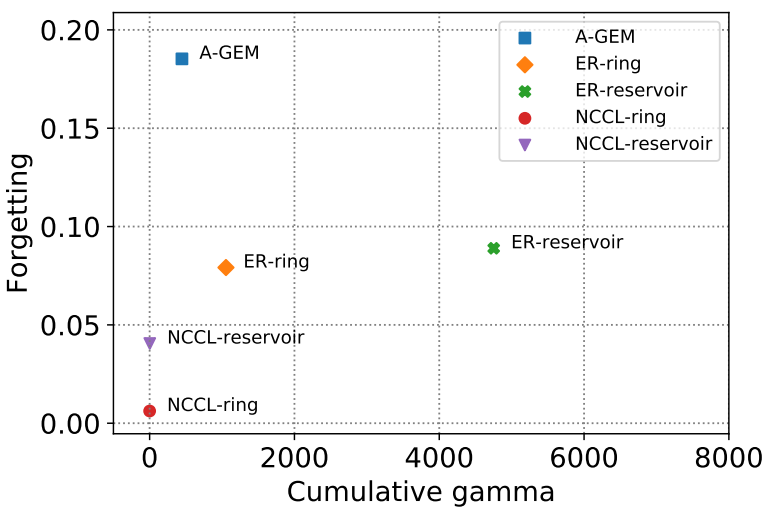

On the Convergence of Continual Learning with Adaptive Methods
Seungyub Han, Yeongmo Kim, Taehyun Cho, Jungwoo Lee
UAI 2023
paper / arxiv

Adaptive Methods for Nonconvex Continual Learning
Seungyub Han, Yeongmo Kim, Taehyun Cho, Jungwoo Lee
NeurIPS 2022 Optimization for Machine Learning Workshop
paper

Perturbed Quantile Regression for Distributional Reinforcement Learning
Taehyun Cho, Seungyub Han, Heesoo Lee, Kyungjae Lee, Jungwoo Lee
NeurIPS 2022 Deep RL Workshop
paper

Chebyshev polynomial codes: Task entanglement-based coding for distributed matrix multiplication
Sangwoo Hong, Heecheol Yang, Youngseok Yoon, Taehyun Cho, Jungwoo Lee
ICML 2021
paper / arxiv

International Journal

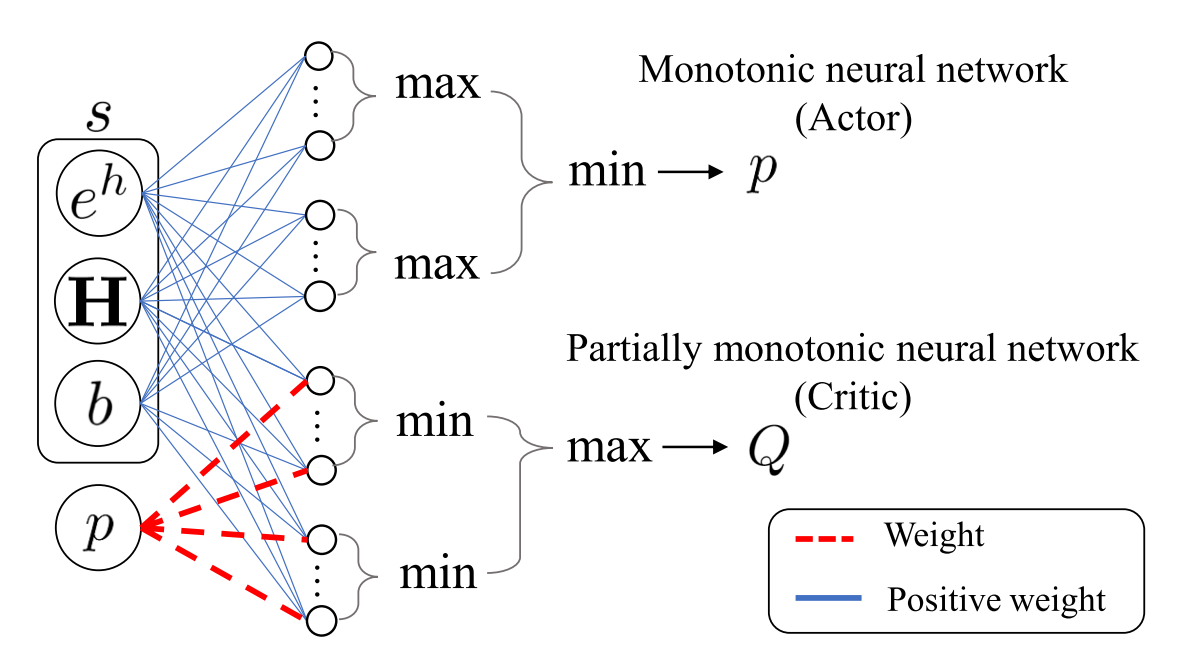

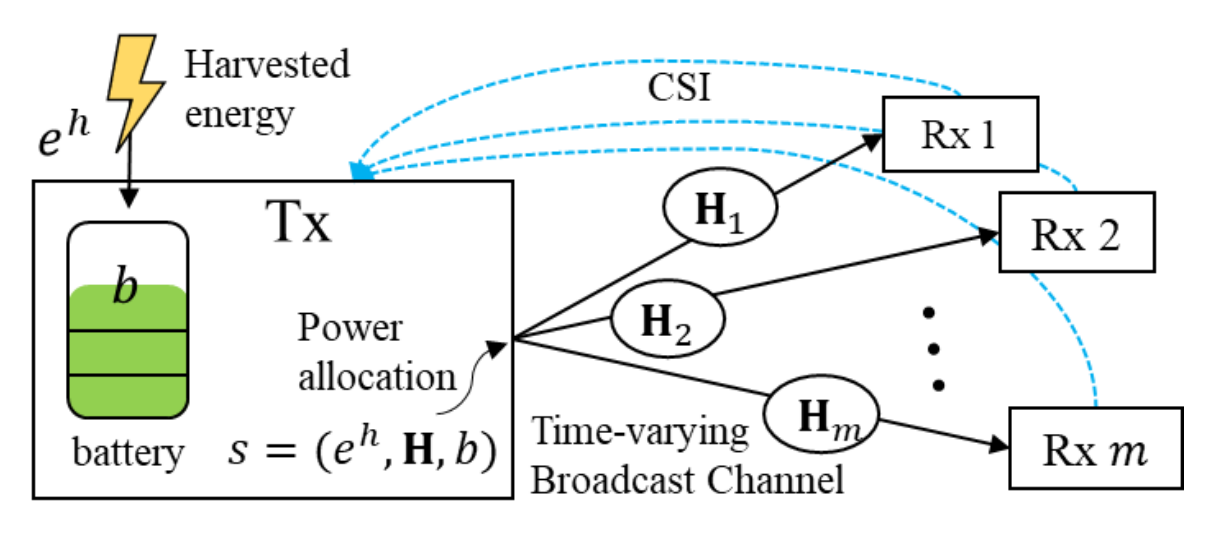

Optimized shallow neural networks for sum-rate maximization in energy harvesting downlink multiuser NOMA systems
Haesung Kim, Taehyun Cho, Jungwoo Lee, Wonjae Shin, H. Vincent Poor
IEEE Journal on Selected Areas in Communications
paper / arxiv

An Efficient Neural Network Architecture for Rate Maximization in Energy Harvesting Downlink Channels
Haesung Kim, Taehyun Cho, Jungwoo Lee, Wonjae Shin, H. Vincent Poor
2020 IEEE International Symposium on Information Theory (ISIT)
paper / arxiv

Design and source code from Jon Barron's website